Framework for AI-First Products

Benevity

Moving beyond linear journeys to design multi-modal, non-deterministic systems.

Challenges

As we began integrating AI into our core product ecosystem, I noticed a critical breakdown in our design process. Our traditional artifacts, such as linear user flows, static wireframes, and "happy path" blueprints, were insufficient for the dynamic nature of AI.

We weren't just building screens anymore; we were building a system that had to handle:

Multi-modality

A user experience that fluidly traverses different interfaces (starting a task via a voice command in the car, continuing it through an SMS chatbot, and finalizing details on a dense web dashboard), shattering the concept of a single "screen flow."

Active Agents

A system that shifts from reactive to proactive. Instead of waiting for a user click, the system acts as an agent that monitors context and nudges users, for example by suggesting a donation or flagging a risk before they even ask.

Non-determinism

An AI backend that operates on probabilities, not hard logic. It isn't always 100% confident in the user's intent, requiring us to design for ambiguity, confidence thresholds, and "best guesses" rather than just binary success or failure states.

An AI-First Design Framework

I developed and facilitated a 3-part strategic framework to bridge the gap between human-centered design strategy and AI technical architecture. This process moved us from designing pages to designing intelligent systems.

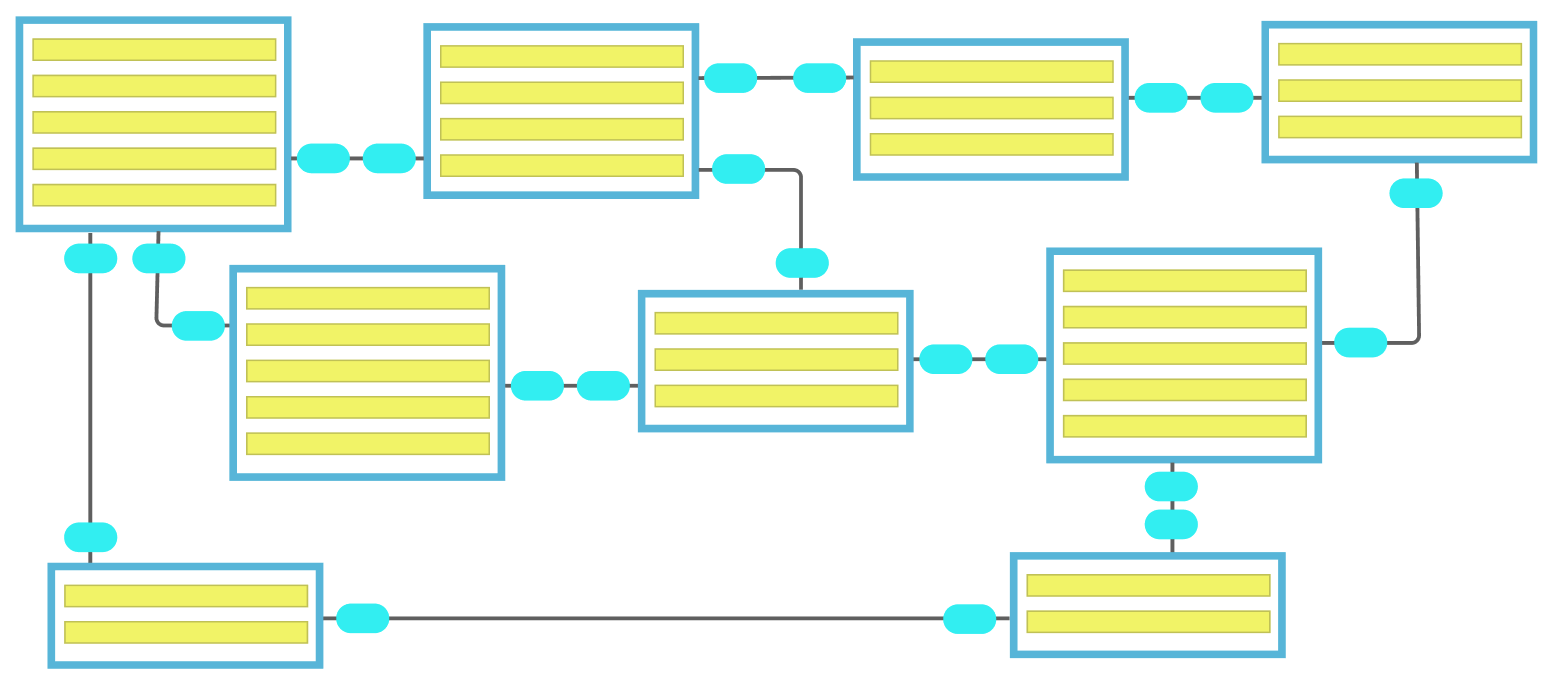

Phase 1: The System Model

From Passive Data to Active Agents

We started by aligning on the "nouns" of our system. Using an evolved Object-Oriented UX (OOUX) approach, we defined our core objects not just by what they are (data), but by what they can do (capabilities).

Key Insight: By defining objects as active agents capable of performing their own tasks, we decoupled the logic from the interface. This allowed us to build consistent, intelligent experiences regardless of whether the user was on a desktop or using a voice interface.
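To make the idea concrete, here is a minimal sketch of an object defined by both its data and its capabilities, with the interface layered on afterwards. The `Campaign` object, its fields, the `summarize` capability, and the two renderers are hypothetical names chosen for illustration; they are not taken from the actual product.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class SystemObject:
    """A core 'noun' of the system: its data plus the capabilities it exposes."""
    name: str
    data: Dict[str, object]
    capabilities: Dict[str, Callable[["SystemObject"], str]] = field(default_factory=dict)

    def perform(self, capability: str) -> str:
        # The logic lives on the object, not in any particular interface.
        if capability not in self.capabilities:
            raise KeyError(f"{self.name} has no capability '{capability}'")
        return self.capabilities[capability](self)


def summarize(obj: SystemObject) -> str:
    # Hypothetical capability: describe progress toward a goal.
    return f"{obj.data['title']}: {obj.data['raised']} of {obj.data['goal']} raised"


def render_web(result: str) -> str:
    # Interface-specific presentation only; no business logic here.
    return f"<p>{result}</p>"


def render_voice(result: str) -> str:
    return f"Here is what I found. {result}."


# Hypothetical example object, for illustration only.
campaign = SystemObject(
    name="Campaign",
    data={"title": "Food Drive", "raised": 400, "goal": 1000},
    capabilities={"summarize": summarize},
)

result = campaign.perform("summarize")
web_output = render_web(result)
voice_output = render_voice(result)
```

Because the capability returns the same result no matter who calls it, each interface only decides how to present that result, which is one way the decoupling described above could look in practice.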

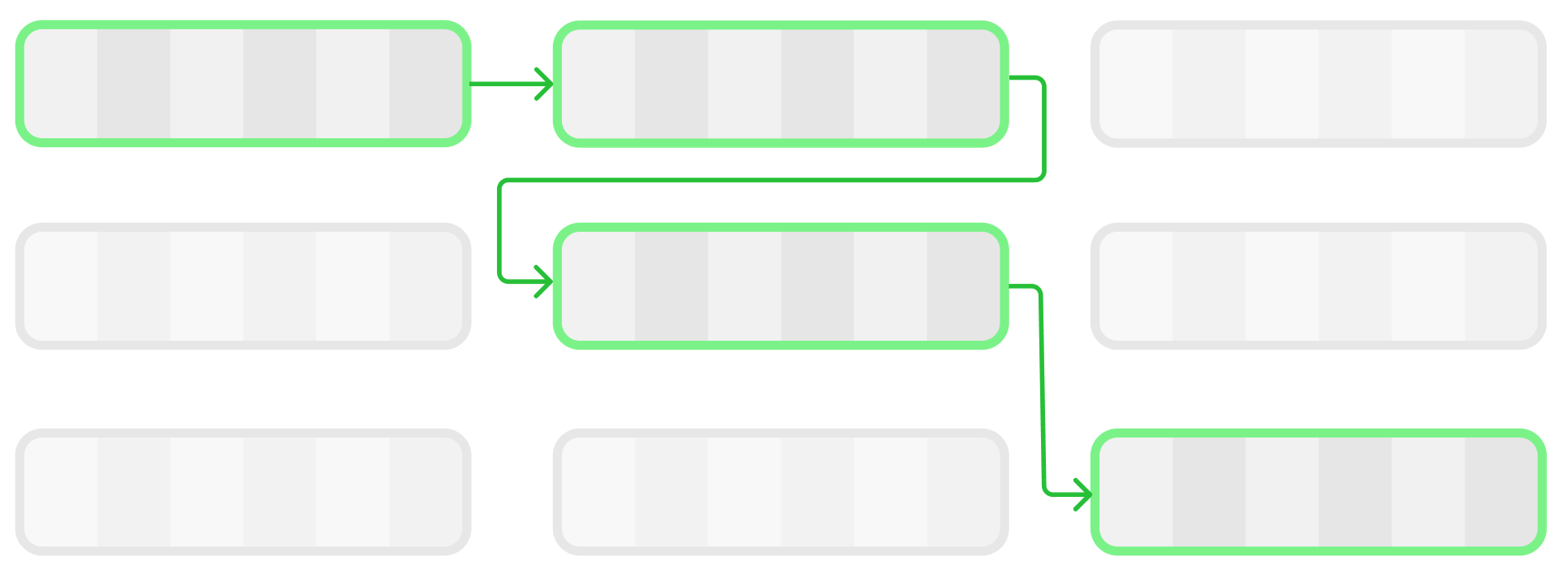

Phase 2: The Conversation Map

From Train Tracks to GPS Navigation

Traditional blueprints break when a user deviates from the path. I introduced Conversation Mapping, a method that treats interaction as a "Many-to-One-to-Many" flow. We mapped multiple inputs (Signals) to a single user goal (Intent), and explicitly designed "Fallback" paths for when the AI was uncertain.

Key Insight: We treated "uncertainty" as a core design surface. By scripting the "re-routing" logic (e.g., what happens when the system has low confidence?), we turned potential errors into trust-building service moments where the AI acts as a helpful partner.
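The re-routing logic can be sketched roughly as follows. This is an illustrative assumption rather than our production logic: the `Signal` fields, the `0.75` threshold, and the `proceed`/`clarify` route labels are all hypothetical, and the confidence scores stand in for whatever an intent classifier would return.

```python
from dataclasses import dataclass
from typing import List

CONFIDENCE_THRESHOLD = 0.75  # assumed value, for illustration only


@dataclass
class Signal:
    channel: str       # e.g. "voice", "sms", "web"
    intent: str        # the intent a (hypothetical) classifier guessed
    confidence: float  # classifier confidence in [0, 1]


def resolve_intent(signals: List[Signal]) -> Signal:
    """Many-to-one: collapse several signals into the single best guess."""
    if not signals:
        raise ValueError("at least one signal is required")
    return max(signals, key=lambda s: s.confidence)


def route(signals: List[Signal]) -> str:
    """One-to-many: send the resolved intent down a main or fallback path."""
    best = resolve_intent(signals)
    if best.confidence >= CONFIDENCE_THRESHOLD:
        return f"proceed:{best.intent}"
    # Low confidence: fall back to a clarifying question instead of failing.
    return f"clarify:{best.intent}"
```

For example, `route([Signal("voice", "donate", 0.9), Signal("sms", "volunteer", 0.4)])` returns `"proceed:donate"`, while `route([Signal("sms", "donate", 0.5)])` returns `"clarify:donate"`, the fallback path where the system checks its guess with the user.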

Phase 3: The AI Charter

From Implicit Assumptions to Explicit Constitution

To ensure our AI acted as a trustworthy representative of our brand, I facilitated a high-level governance session with leadership. We separated the AI's Personality (its tone) from its Agency (its permissions).

Key Insight: We established binding rules for interaction, such as: "Our AI can be proactively suggestive, but never autonomous with sensitive user data." This empowered my design team to make decisions quickly, knowing exactly where the ethical guardrails stood.
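One way to picture the split between Personality and Agency is as a small policy object that the system consults before acting. The sketch below is hypothetical: the `Charter` fields, the two agency levels, and the assumption that the AI may act on its own when no sensitive data is involved are illustrative choices, not the wording of the actual charter.

```python
from dataclasses import dataclass
from enum import Enum


class Agency(Enum):
    SUGGEST = "suggest"  # propose an action and wait for the user
    ACT = "act"          # carry out the action without asking


@dataclass(frozen=True)
class Charter:
    tone: str                     # Personality: how the AI speaks
    max_agency_default: Agency    # Agency ceiling for ordinary data (assumed)
    max_agency_sensitive: Agency  # Agency ceiling when sensitive data is involved


def allowed_agency(charter: Charter, requested: Agency, touches_sensitive_data: bool) -> Agency:
    """Clamp the requested level of agency to what the charter permits."""
    ceiling = (
        charter.max_agency_sensitive
        if touches_sensitive_data
        else charter.max_agency_default
    )
    if requested is Agency.ACT and ceiling is Agency.SUGGEST:
        # Downgrade to a suggestion rather than acting autonomously.
        return Agency.SUGGEST
    return requested


# Hypothetical charter encoding "suggestive, but never autonomous with sensitive data".
charter = Charter(
    tone="warm and concise",
    max_agency_default=Agency.ACT,
    max_agency_sensitive=Agency.SUGGEST,
)
```

With this charter, `allowed_agency(charter, Agency.ACT, touches_sensitive_data=True)` is downgraded to `Agency.SUGGEST`, and changing the tone never changes what the AI is permitted to do.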

The Outcome

This framework did more than produce artifacts; it transformed how our product team operates.

Engineering Alignment: Developers and designers now share a common vocabulary ("Objects" and "Capabilities"), drastically reducing translation errors during hand-off.

Velocity: By designing "Fallback" states upfront, we reduced QA cycles and edge-case bugs.

Trust: We moved from building "black boxes" to "glass boxes," giving stakeholders visibility into the AI's logic and ensuring our automation always served the human experience.